Bringing Nightmares to life

In 2018 Leonie Sedman (Curator of Heritage and Collections Care) installed a new display of wet collection specimens in the Tate Hall Museum. The exhibition is aptly named ‘Nightmares in a Bell Jar’ and the creatures on display vary from repulsive reptiles to frightening fish … and the kids love them!

One thing the Visitor Services Team has noticed while patrolling the floors over the years is that children love disgusting and creepy objects, and we’re happy to say we have quite a few of those on display! The questions children kept asking about our creatures were ‘Are they real?’ and ‘Why aren’t they moving?’

Nightmares in a Bell Jar display in the Tate Hall Museum

Instead of relying on traditional object labels, we started to think of new ways to engage our visitors. What could be better than using 21st-century augmented reality to bring our 20th-century zoology specimens back to life?

We wanted to educate our younger and older visitors alike, but to do it in a fun, unique and engaging way. We had the opportunity to test an augmented reality app as part of the BBC Civilisations Festival, and being able to interact with an object on a mobile device encouraged us to look closer, stay longer and find out more while having fun.

We had previously worked with the University of Liverpool’s Mobile Development Team for the ‘VG&M iPad Tour’ and so we approached them again to see if a new app could be produced for our zoology exhibition. The project team consisted of Sally Shaw (Team Leader), Chris Rodenhurst (Designer and artist), John Gilbertson (Android Developer) and Carl Knott (iOS Developer) with support from Nicola Euston (Head of Museums and Galleries), Leonie Sedman (Curator of Heritage and Collections Care), Kieran Ferguson and myself from the VG&M (Visitor Services).

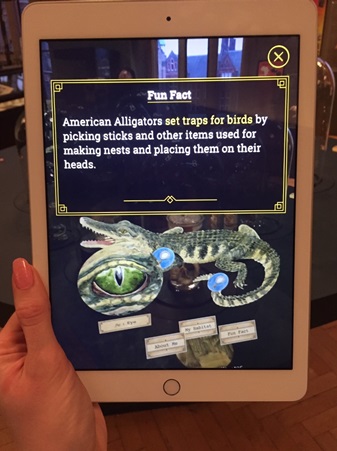

Although we originally had ideas of incorporating videos into the app, we settled on a design that combines augmented reality with original artwork and interesting facts. The app took the project team around 3 months to produce, alongside their other projects for the university. The VG&M team began by researching facts about each of the specimens on display and decided on three information sections for each creature: ‘About Me’, ‘My Habitat’ and ‘Fun Fact’. Once we had gathered all of our information, the Mobile Development Team could begin mastering new skills for augmented reality and Chris could start painting and animating the creatures.

Chris painting the creatures

In the finished app, each creature has been animated so that it subtly moves on screen and appears outside the bell jar display cases, making it more accessible and engaging. Over the years, our specimens have gradually lost their natural colours and distinctive markings due to the chemicals used in the preservation process. The app and its original drawings now enable our visitors to see what these creatures really look like in the wild.

The user can move their phone or tablet closer to view the drawings in more detail and find out more information about each creature by selecting different options.

We asked the Mobile Development Team a few questions about the app design process:

How did this project come about?

Sally: The team had already developed a ‘points of interest’ app for the VG&M, which uses location-based technology to facilitate guided tours of the building. We were asked to add videos and information to this app to bring the Wet Collection to life. At the time we were exploring new technologies such as Augmented Reality (AR), so we suggested using the Wet Collection as the subject of a brand new and exciting app, which became ‘Nightmares in a Bell Jar’.

What was the most challenging part of this project?

Chris: Painting the markers for the exhibition table was the most challenging part of the project. We were never completely sure how the app was seeing them, so there was a bit of guesswork involved in designing the scenes. I also wanted to create images that were intriguing without giving anything away. It was difficult composing scenes without a strong, obvious subject.

John: Learning the AR framework. It’s a completely new technology that we hadn’t experimented with before.

Carl: Learning the AR framework and a 3D game engine, SceneKit (3D vector maths was a little tricky). Optimising SpriteKit and SceneKit and integrating them was also hard. Memory management was a hurdle: it was difficult to track down zombie objects, and I had to drill into SpriteKit to release huge animation textures.

John testing the Android version in the office

What was the most enjoyable part?

Chris: Painting the animals was the most enjoyable part of the process. I wanted the illustrations to look like nineteenth-century designs from Victorian natural history books, so the paintings had to be detailed and realistic. I had a lot of fun figuring out the animals. Using watercolours can be a bit nerve-wracking, though, as they’re quite unpredictable and it can be hard to fix a painting if something goes wrong. The longer a painting takes, the more nervous you become about making a mistake!

John: Learning the AR framework and discovering all the possibilities for both this project and future ones.

Carl: Learning and researching new technology, and optimising code to achieve fast rendering at a consistent 60fps.

How many new skills did the team learn along the way?

Chris: I learned how to compose interactive scenes in 3D space. There was a lot of trial and error involved in placing the various parts of the scenes so that the app would be intuitive as well as surprising and engaging.

John: AR technology, re-learning 3D vector maths and new image compression formats.

Carl: ARKit, SceneKit and SpriteKit.

The team test out the app at the VG&M

How long did it take you to complete all of the code?

John and Carl: About 5-6 weeks in total, alongside other projects.

Carl working on the iOS version

Chris, could you tell me a bit about your art background? Did you study it at school, or is it just a hobby that you enjoy?

Chris: I’ve enjoyed art since I was little. I studied art and design in college, and I have a degree in Multimedia Arts. I’ve worked as an artist for a videogame company and for design agencies, I’ve freelanced as an illustrator and taught design and illustration at John Moores University. I carry a sketchbook around with me and draw every day – people on the bus, bits of architecture, and ideas for apps!

John and Carl, could you tell me a bit about your programming background?

John: I did a degree in Computer Science, but taught myself Android programming on the job, with the help of a short introductory course a few years ago.

Carl: I have a Software Engineering degree and master’s, with 10 years’ experience of iOS development and 3 years’ experience of .NET.

Chris, what was your favourite creature to draw and why?

Chris: I think the sea urchin was my favourite, because it was so strange and difficult to figure out. The sea urchin has lots of odd shapes and textures.

Original artwork

Which creature was the most interesting to research?

Chris: The horseshoe crab is amazing. I never knew they had blue blood, or that they were farmed for the medicinal qualities of their blood. I didn’t realise they weren’t even crabs at all. Their mouths are in the middle of their bodies and they use the bases of their legs to eat. I watched lots of videos of them eating to figure out how to animate their mouths.

Did any of the creatures give you nightmares?

Chris: Researching all the parasites was pretty awful, but I think the tongue worm was probably the worst. The particular species in the VG&M exhibition lives in the lungs of snakes and feeds off its host’s blood. In order to paint an animal correctly, you have to try and understand how it works, which in this case meant spending a long time figuring out where to put the hooks!

How difficult was it to animate the images?

Chris: There were lots of challenges involved in animating the animals. They had to be cut out in Photoshop, and then any elements that were to be animated, such as claws and legs, had to be cut out separately. I then had to cover all the gaps, so there’d be no holes when the animal moved. Sometimes I painted new sections to fix the holes; sometimes I used something called a clone tool in Photoshop to reuse existing parts of the painting.

I put the animations together in After Effects. To reduce the file size and make sure the app ran smoothly, the animations had to work as short looping sections, each under two seconds long. It was a challenge to make believable animations under those constraints. The easiest creatures were the ones that already had jointed legs and claws and moved like little robots, things like the crab and the bug. The alligator was difficult because alligators don’t usually move quickly at all!

Chris animating images for the app

What other projects will you be utilising these new skills on?

Sally: We’re hoping to make more Augmented Reality apps using the technology we’ve developed for this project – watch this space!

Head of Museums & Galleries, Nicola Euston, said: “This app has been really fun to develop and Sally’s team have been fantastic throughout the whole process, explaining clearly what was required from us and keeping us informed throughout the development process. We’re really excited about this app and are keen to work with Sally’s team in future on developing other apps linked to our collections and displays.”

The ‘Nightmares in a Bell Jar’ app

Download the app

The app is now available for Apple (iOS 11+) and Android (7+) devices and can be downloaded from the University of Liverpool App Store. If you don’t have your own mobile device, you can borrow a tablet from the VG&M Visitor Services Team in the Tate Hall Museum.